Meta Superintelligence Labs

prajj at meta dot com

Bio

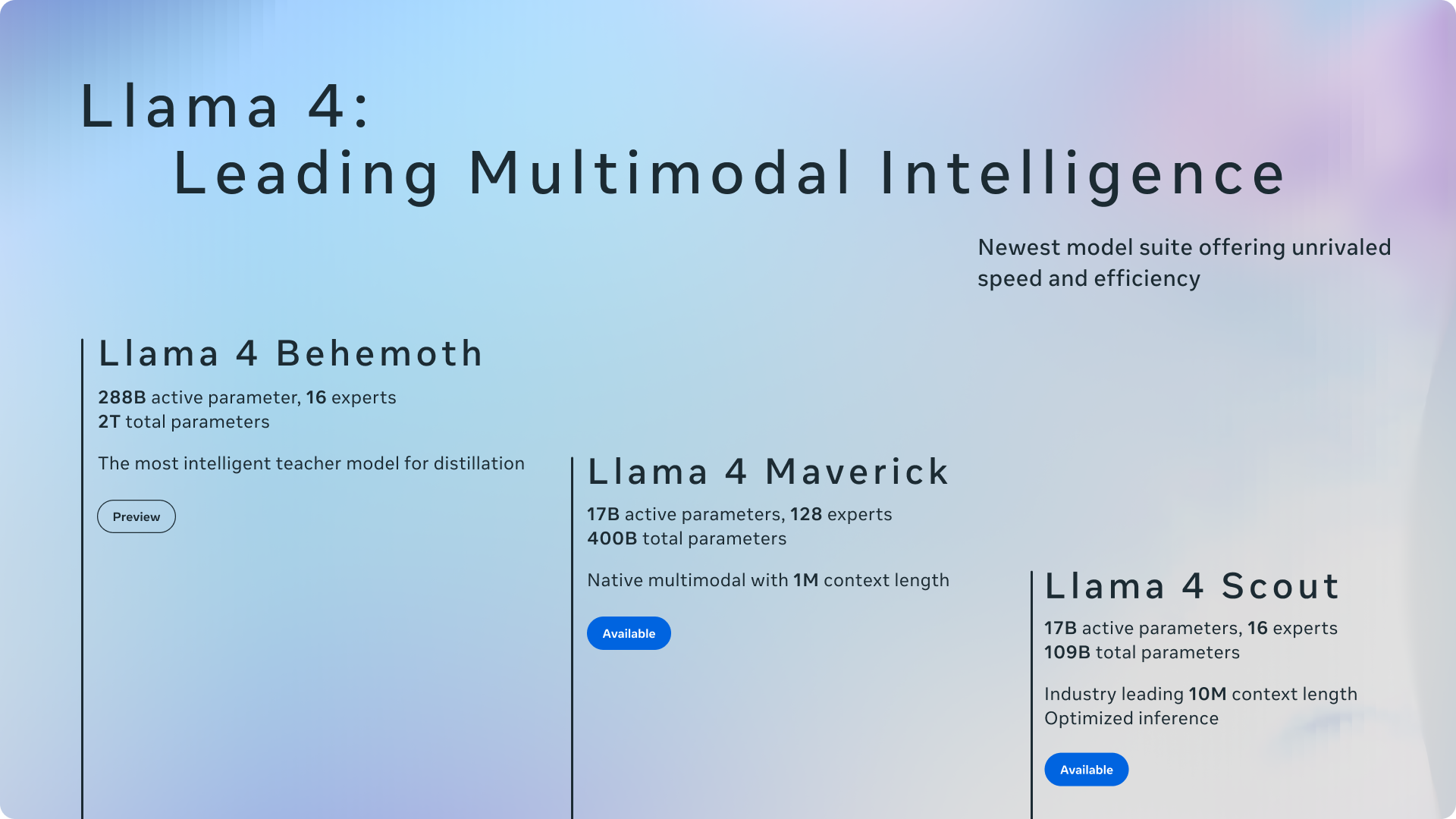

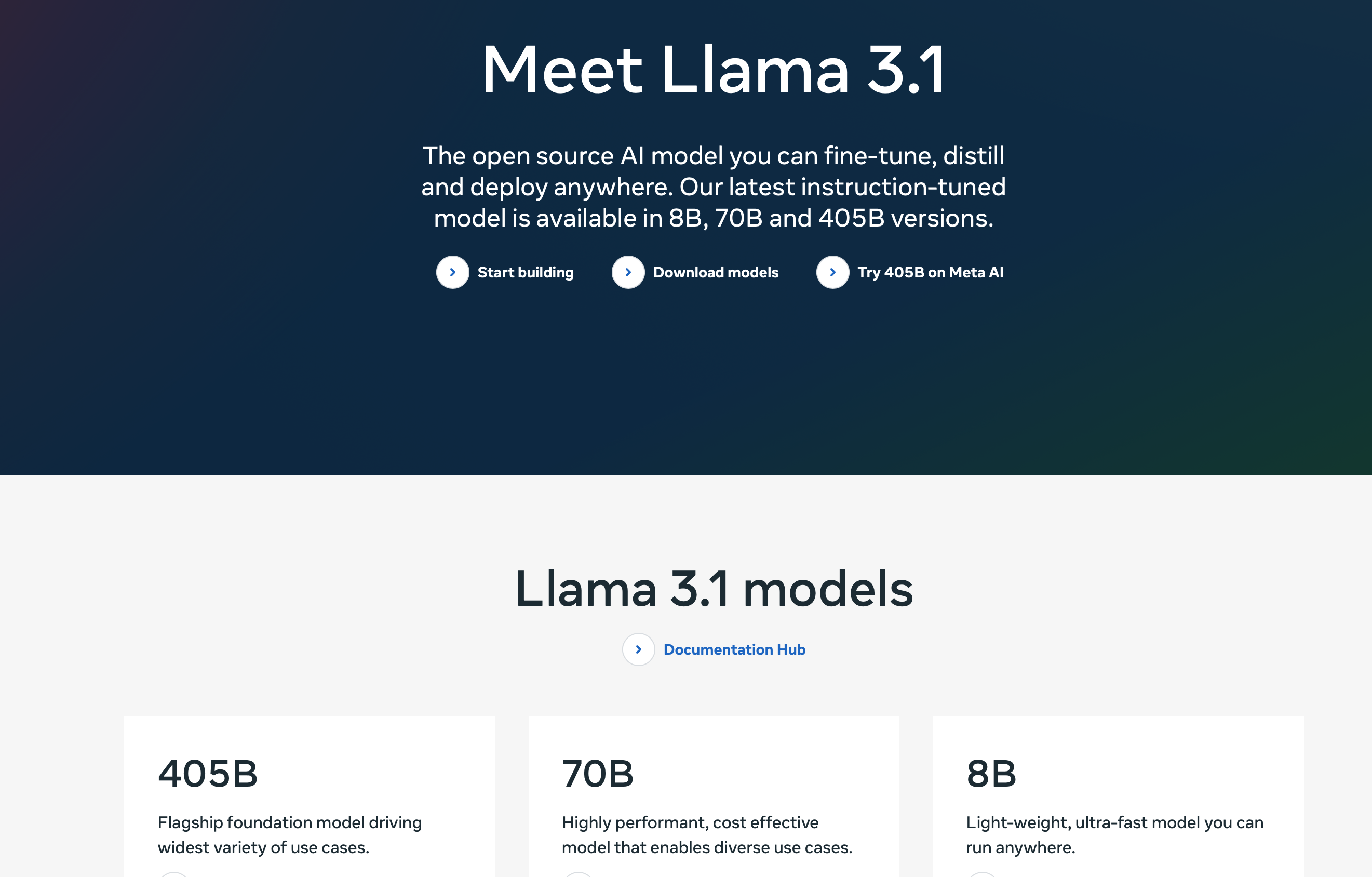

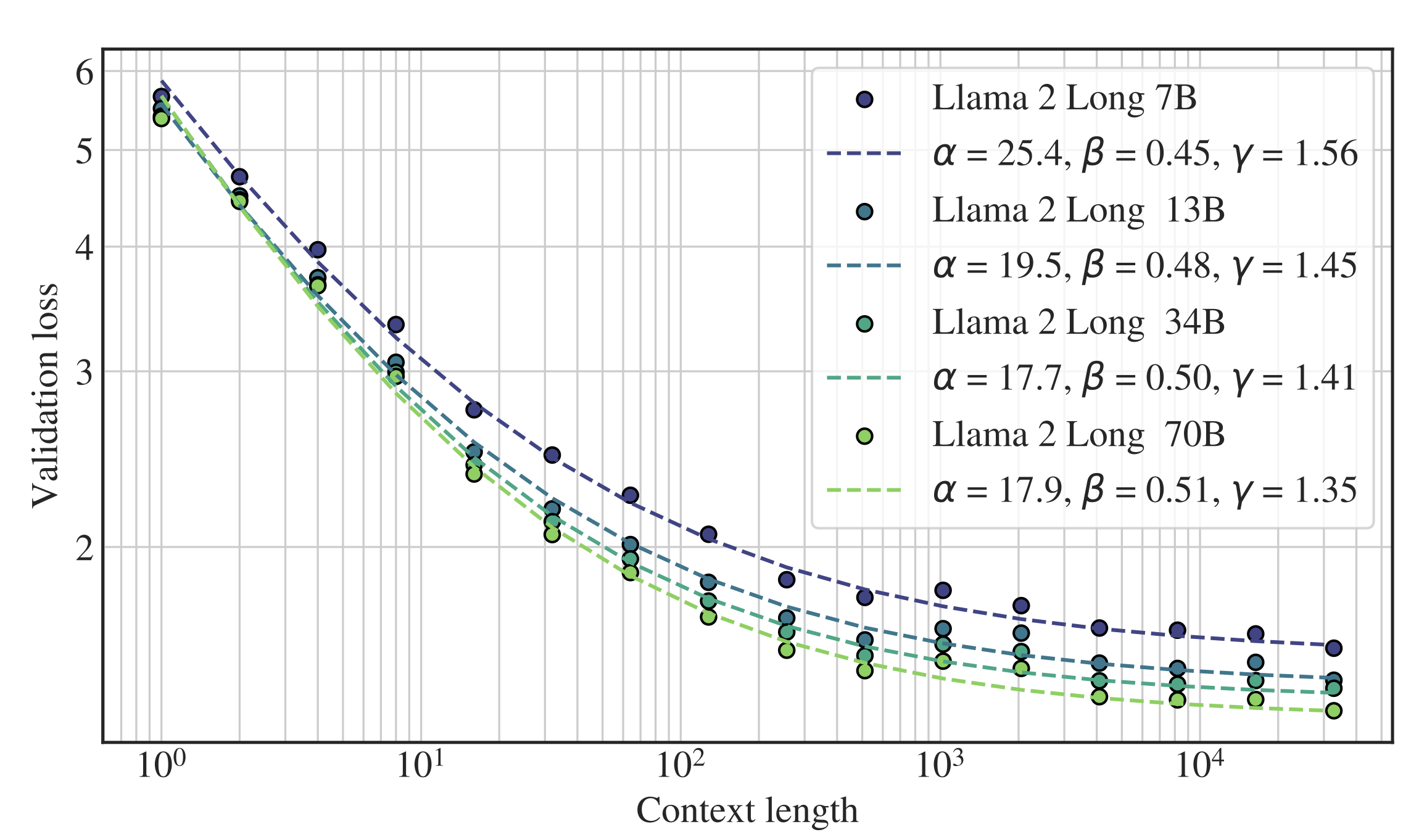

I’m Praj, an AI Researcher at Meta Superintelligence Labs, where I work on building foundational models. I am a core contributor to LLaMA 4, LLaMA 3, LLaMA 2, and LLaMA 2 Long, which power Meta’s flagship AI assistant, meta.ai. My primary focus has been fundamental pre-training research and the infrastructure around it. One of my primary contributions is building long-context capabilities for LLaMA 4, spanning modeling, pre-training infrastructure, and inference. LLaMA 4 can attend to documents exceeding 10M tokens. Previously, I was an AI Resident within Reality Labs and Fundamental AI Research (FAIR) at Meta, working on offline reinforcement learning. My Google Scholar profile can be found here.

Prior to Meta, I was a CS graduate student at the University of Texas at Dallas, where I worked on commonsense reasoning under the supervision of Prof. Vincent Ng. My thesis is about improving commonsense reasoning through adversarial learning.

Publications

BTS: Harmonizing Specialized Experts into a Generalist LLM

The Llama 4 herd: The beginning of a new era of natively multimodal AI innovation

The LLaMA 3 herd of models

Correlating and Predicting Human Evaluations of Language Models from NLP Benchmarks

Effective Long-Context Scaling of Foundation Models

Llama 2: Open Foundation and Fine-Tuned Chat Models

Paper

Official Announcement

Code

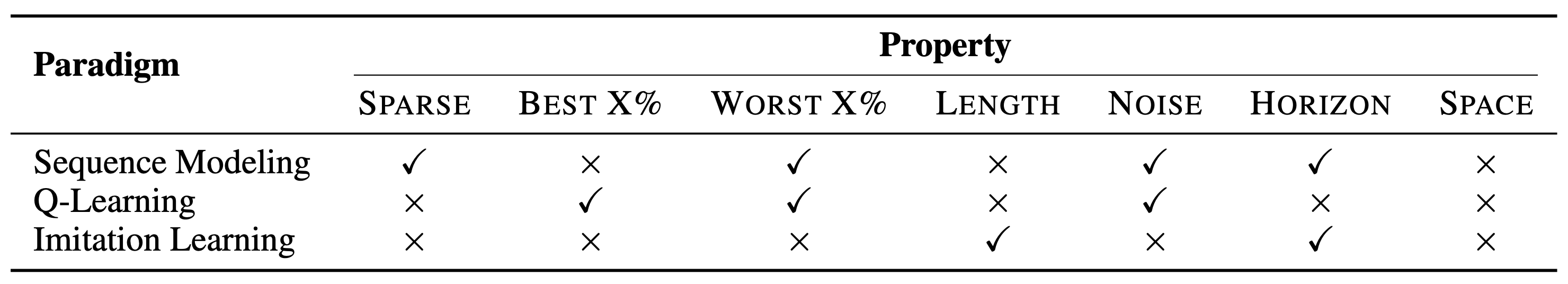

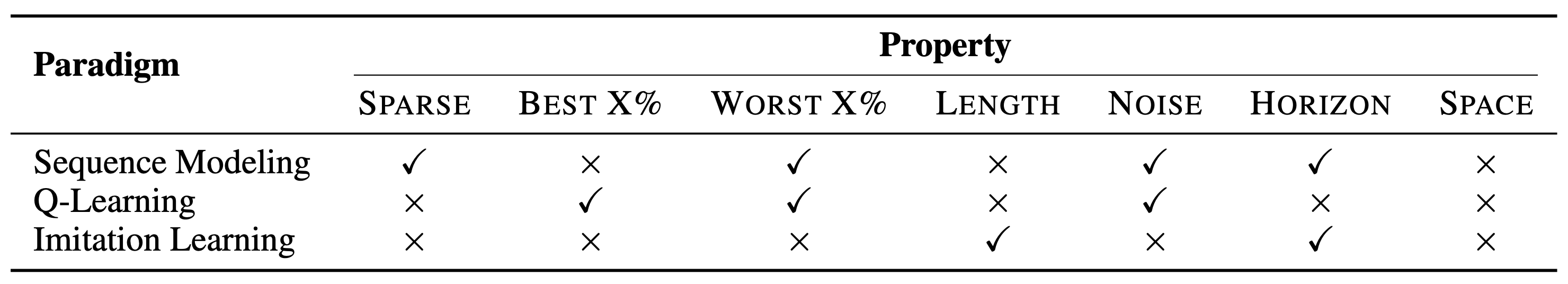

Sequence Modeling is a Robust Contender for Offline Reinforcement Learning

International Conference on Learning Representations (ICLR) 2024

arXiv

Code

Bibtex

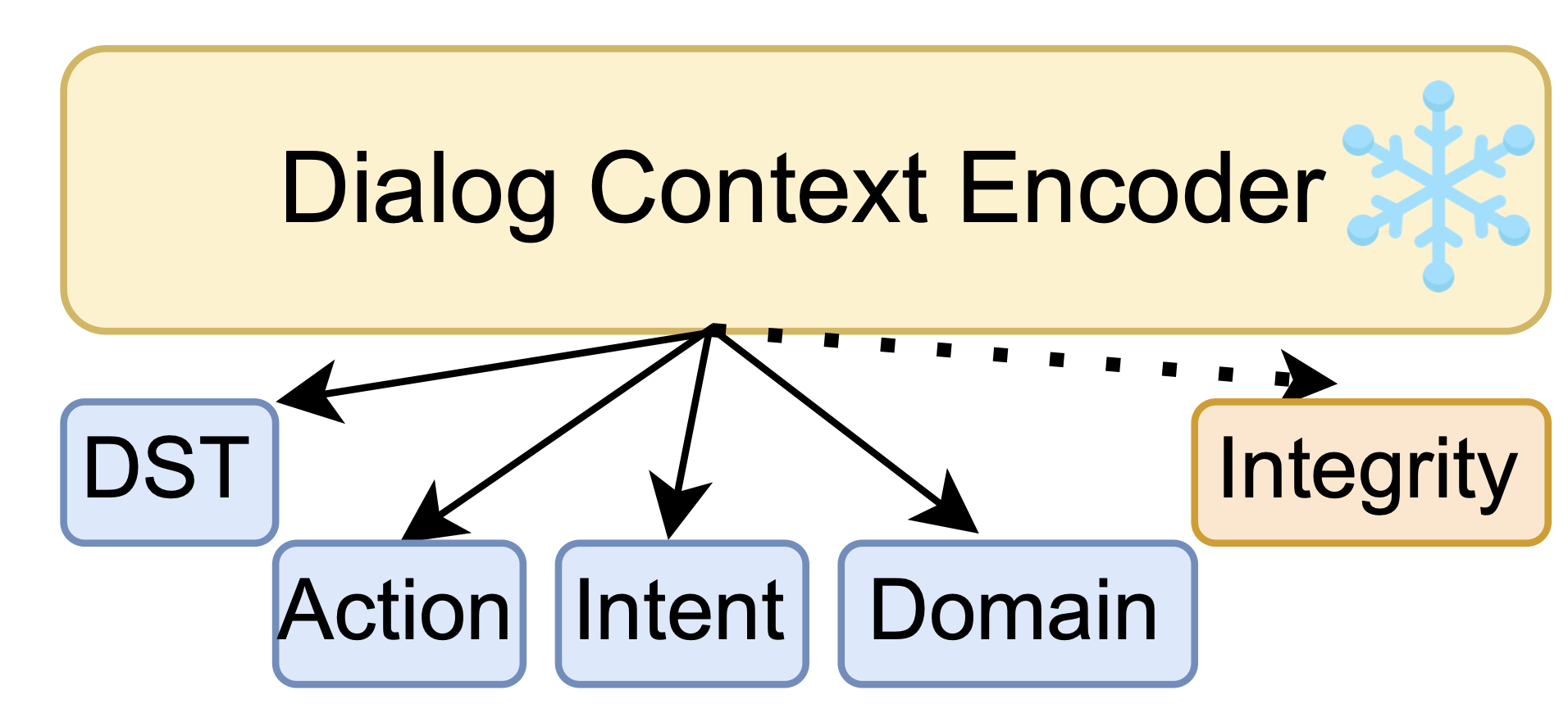

AUTODIAL: Efficient Asynchronous Task-Oriented Dialogue Model

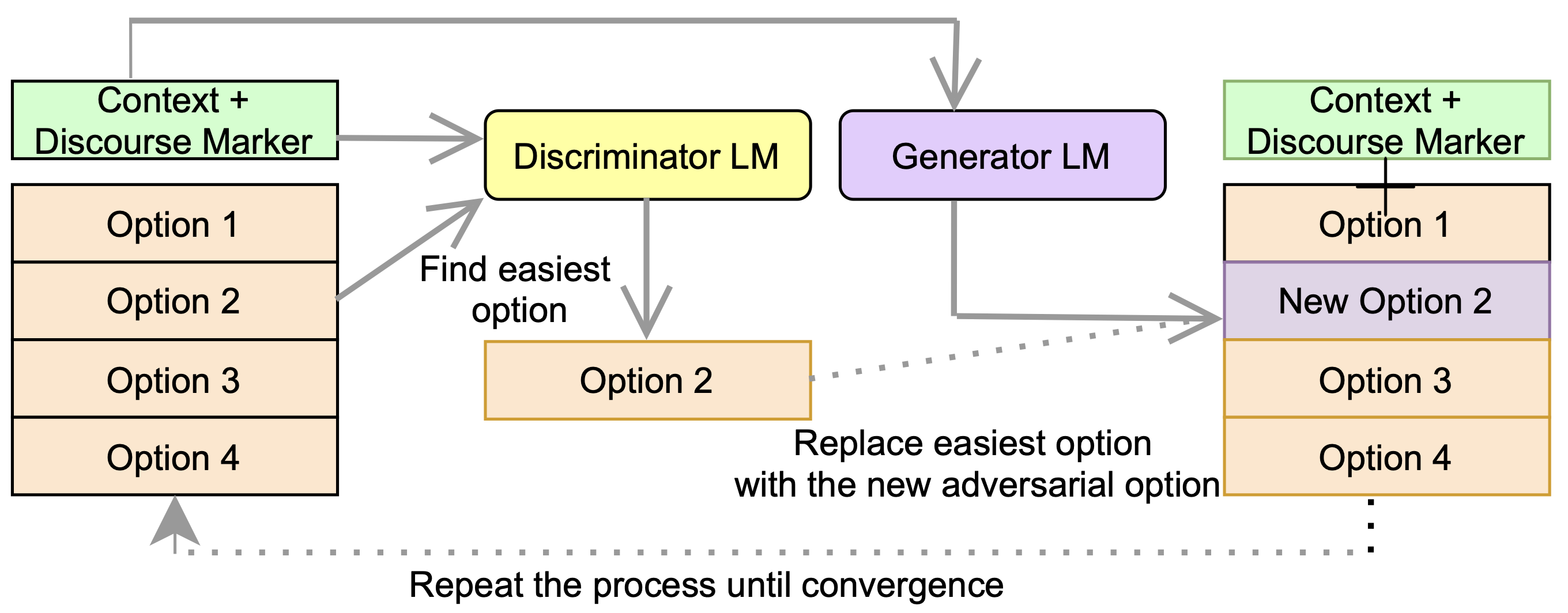

DiscoSense: Commonsense Reasoning with Discourse Relations

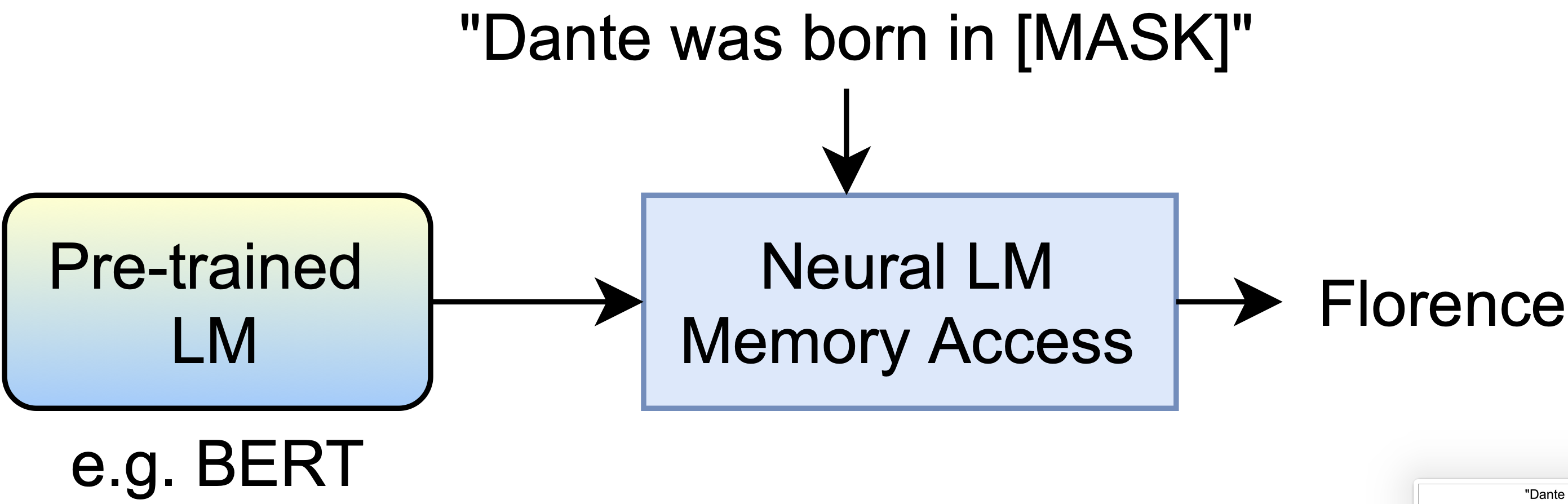

Commonsense Knowledge Reasoning and Generation with Pre-trained Language Models: A Survey

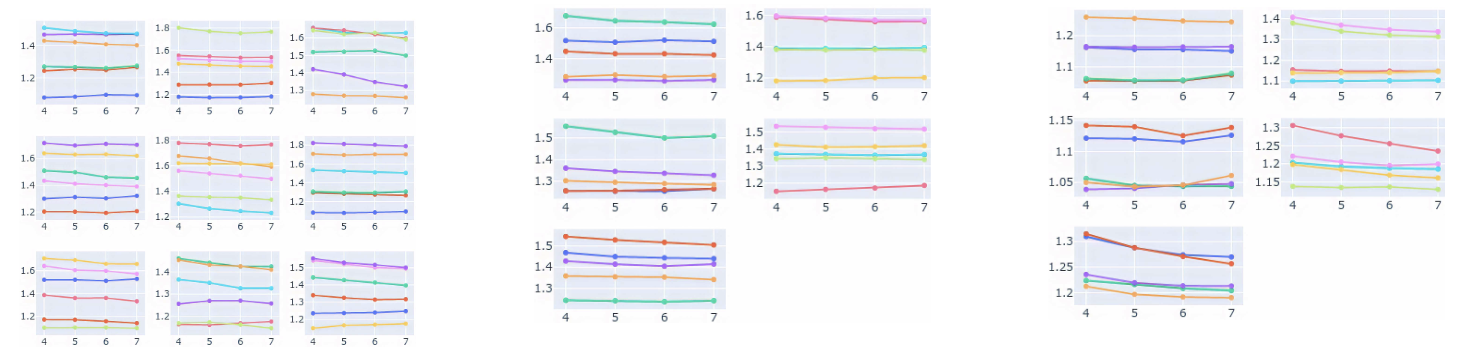

Generalization in NLI: Ways to [Not] Go Beyond Simple Heuristics

EMNLP Workshop on Insights from Negative Results 2022

Paper

Code (Hugging Face)

Code (PyTorch Lightning)

Bibtex

Presentation video

Poster

Slides

Adaptive Transformers for Learning Multimodal Representations

ACL SRW 2022

Paper

Code

Bibtex

Presentation Video

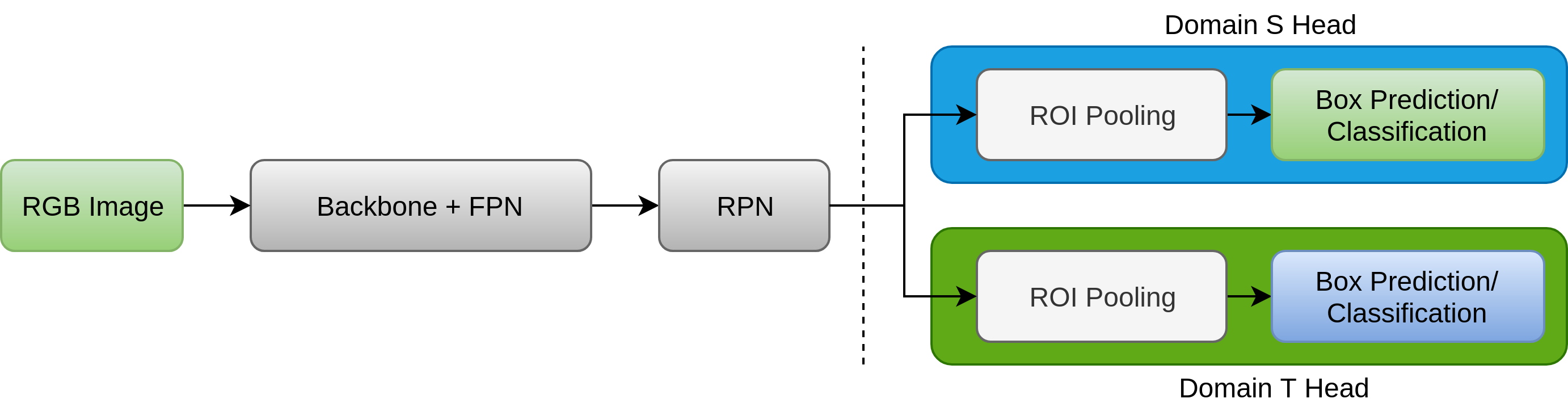

On Generalization of Detection Models for Unconstrained Environments

ICCV AutoNUE Workshop 2022

Paper

Code

Bibtex

Poster

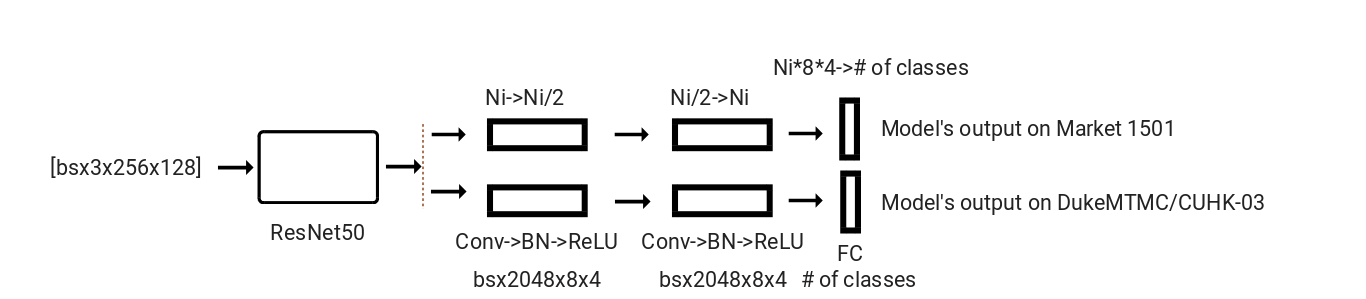

Incremental Learning in Person Re-Identification

arXiv preprint

Paper

Code

Bibtex

Poster

Side projects

fluence

Winner of PyTorch Global Hackathon 2020. A PyTorch deep learning library focussed on providing support for compute-efficient and debiasing algorithms in transformer-based models for NLP research. Contains implementations of Adaptive Attention, Sparsity, Layerdrop, Debiasing, Pruning utilities, etc.

Autonomous Object Detection

This project focussed on 2D object detection with PyTorch. Users can leverage models from `torchvision` and the datasets provided in this project (`idd`, `cityscapes`, `bdd`) to train and evaluate models. Support for incremental learning was also added.